Agentic AI is projected to autonomously resolve 80% of common customer service issues by 2029, while helping organizations cut operational costs by about 30%, according to Gartner Inc.

Now imagine your AI voice agent starting the day by handling a large volume of outbound calls: confirming appointments, reminding customers of renewals, or collecting feedback. It works continuously in the background, never needing a break, and consistently delivering results.

But how do you know it is actually performing well?

As organizations increasingly deploy AI voice assistants to manage everything from reminders and renewals to surveys and follow-ups, the question isn’t if AI will touch your outbound customer service; it’s how well it will perform.

This matters because:

- Stay ahead of the curve: If your outbound voice strategy doesn’t include tracking key performance metrics, you’re flying blind.

- AI scales, but only if you optimize: Voice agents can handle high volumes of calls 24/7; however, without metrics, script updates, and refinements, they may still underperform human agents in crucial areas.

- Trust and experience matter: Your AI must come across as reliable, efficient, and customer-friendly.

In this blog post, we will explore the metrics that matter most for outbound calls, why they are important, and how tracking them can improve both customer experience and business outcomes.

Why Metrics Matter for AI Voice Agents

You’re not waiting and reacting, but actively reaching out. When AI takes the lead in outbound communications, getting it right is critical. Here's why tracking performance metrics is essential:

1. Outbound Is Proactive

Outbound calls don’t have the luxury of timing; they need to land just right. A study by Deloitte found that generative AI in contact centers reduced call durations by around three minutes on average, freeing agents to focus on personalized customer interactions rather than rote summaries or admin work.

Why it matters: shorter, sharper calls mean your AI hits the right note with better timing, relevance, and content that resonates.

2. AI Must Be Optimized Through Data

Unlike a human agent who can be coached directly, your AI improves only through measurable feedback. Metrics are not optional; they are the sole input your AI can use to refine performance.

Example: If your outbound AI is making renewal reminder calls and you notice a low pickup rate at certain times of day, call-time distribution metrics can guide adjustments. Shifting those calls to higher-response windows can instantly improve connection rates and outcomes.
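
As a rough illustration of the call-time analysis described above, here is a minimal Python sketch that groups calls by hour of day and ranks the hours by pickup rate. The record shape (a dial timestamp plus an answered flag), the function names, and the sample data are all assumptions for illustration, not part of any particular dialer's API.

```python
from collections import defaultdict
from datetime import datetime


def pickup_rate_by_hour(calls):
    """Return {hour: answered / dialed} for each hour of day that has calls.

    `calls` is an iterable of (dialed_at, answered) pairs. This record
    shape is hypothetical; adapt it to your own call logs.
    """
    dialed = defaultdict(int)
    answered = defaultdict(int)
    for dialed_at, was_answered in calls:
        dialed[dialed_at.hour] += 1
        if was_answered:
            answered[dialed_at.hour] += 1
    return {hour: answered[hour] / dialed[hour] for hour in sorted(dialed)}


def best_windows(rates, top_n=2):
    """Hours with the highest pickup rate, best first."""
    return sorted(rates, key=lambda hour: rates[hour], reverse=True)[:top_n]


# Made-up sample: three calls at 9 am, three calls at 6 pm.
sample_calls = [
    (datetime(2025, 3, 3, 9, 5), False),
    (datetime(2025, 3, 3, 9, 40), False),
    (datetime(2025, 3, 3, 9, 55), True),
    (datetime(2025, 3, 3, 18, 10), True),
    (datetime(2025, 3, 3, 18, 30), True),
    (datetime(2025, 3, 3, 18, 45), False),
]
rates = pickup_rate_by_hour(sample_calls)
print(rates, best_windows(rates, top_n=1))
```

With real data you would want far more calls per hour before shifting a campaign, since a handful of calls can make any window look good or bad by chance.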

3. Metrics Fuel Better UX, Compliance, Efficiency, and ROI

The result is visible in the numbers.

- Cost & Efficiency: AI voice systems can automate 30–60% of routine inquiries, reduce training time by 35–50%, and cut staffing costs by up to 42% in under a year, while lifting customer satisfaction by 18%.

- ROI in Real Results: Verizon’s AI tool improved agent efficiency, driving a nearly 40% increase in sales while cutting call times.

- First Call Resolution (FCR) Power: FCR remains the single most influential metric for satisfaction.

Putting It All Together

- Outbound isn’t casual. You’re interrupting someone’s day. If your AI isn’t timely, personal, and well-crafted, calls may fall flat.

- AI gets better through numbers, not hint-based corrections or pep talks. Fewer insights = slower improvement.

- Metrics translate tech into tangible value: less cost, smoother CX, policy compliance, and business wins like conversions and increased renewals.

If you’re deploying outbound AI voice agents and not tracking the right metrics, you’re steering without a dashboard.

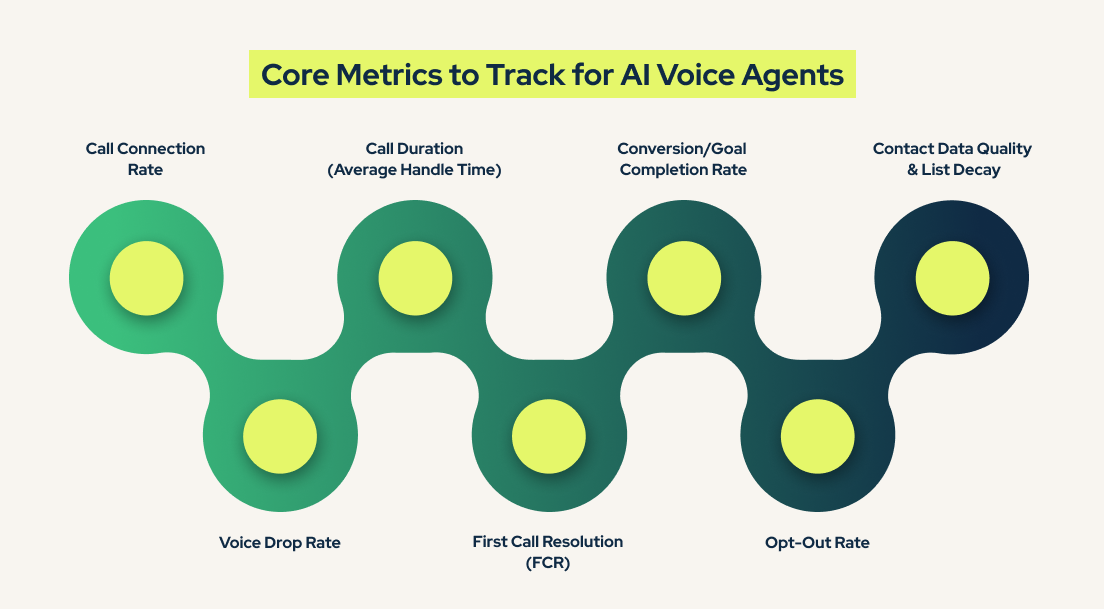

Core Metrics to Track

Not all metrics are equal, and in outbound AI voice operations, it’s easy to track the wrong ones or drown in vanity data. Below are the key performance metrics that actually move the needle.

Each one gives you insight into a different part of the call lifecycle: from connection, to engagement, to outcome. Together, they give you a full picture of how your AI voice agent is performing and what you can do to improve.

1. Call Connection Rate

- What it is: The percentage of outbound calls that connect with a live person.

- Why it matters: It’s your entry point. A low connection rate hints at issues with contact lists, timing, or dialing strategies.

- Benchmarks:

- B2C campaigns: Typically see 10–30% connection rates.

- B2B efforts: Generally range between 15–40%.

- Warm leads: Tend to deliver 40–60% connection rates.

- Cold outreach: Often falls short at 5–15%.
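
To make the benchmark comparison concrete, here is a small Python sketch that computes a connection rate and checks it against the ranges listed above. The segment keys and function names are assumptions chosen for this example.

```python
# Benchmark ranges quoted in the list above, as (low, high) percentages.
# The dictionary keys are hypothetical labels for this sketch.
BENCHMARKS = {
    "b2c": (10, 30),
    "b2b": (15, 40),
    "warm": (40, 60),
    "cold": (5, 15),
}


def connection_rate(connected, dialed):
    """Percentage of dialed calls that reached a live person."""
    if dialed == 0:
        return 0.0
    return 100.0 * connected / dialed


def compare_to_benchmark(rate, segment):
    """Return 'below', 'within', or 'above' relative to the segment's range."""
    low, high = BENCHMARKS[segment]
    if rate < low:
        return "below"
    if rate > high:
        return "above"
    return "within"


rate = connection_rate(connected=180, dialed=1000)
status = compare_to_benchmark(rate, "b2c")
print(f"{rate:.1f}% is {status} the B2C range")
```

The same 18% rate would count as "below" for a warm-lead campaign, which is why the segment matters as much as the raw number.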

2. Voice Drop Rate

- What it is: The percentage of answered calls dropped by customers within the first few seconds.

- Why it matters: Signals whether your AI sounds natural, or if callers are put off by tone, pacing, or delays.

- Ideal threshold: Keep this as low as possible to ensure smooth call openings.
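
One way to compute this metric is sketched below in Python. The 5-second cutoff is an assumption standing in for "the first few seconds"; pick whatever threshold fits your call openings.

```python
def voice_drop_rate(answered_durations, threshold_seconds=5.0):
    """Percentage of answered calls that ended within `threshold_seconds`.

    `answered_durations` holds the length in seconds of each answered call.
    The 5-second default is an assumption, not an industry standard.
    """
    if not answered_durations:
        return 0.0
    early = sum(1 for d in answered_durations if d <= threshold_seconds)
    return 100.0 * early / len(answered_durations)


# Made-up durations (seconds) for eight answered calls.
durations = [2.1, 48.0, 3.9, 95.5, 120.0, 4.4, 61.2, 30.0]
drop_rate = voice_drop_rate(durations)
print(f"Voice drop rate: {drop_rate:.1f}%")
```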

3. Call Duration (Average Handle Time)

- What it is: Average length of successfully completed calls, including talk time and wrap-up tasks.

- Why it matters: Reflects both engagement and efficiency. Too short may mean disengagement, too long may mean inefficiency.

- Benchmark: Aim for an average handle time of around six minutes.

4. First Call Resolution (FCR)

- What it is: The share of calls resolved or objectives met during the initial contact, with no follow-ups needed.

- Why it matters: It is one of the most impactful indicators of efficiency and customer satisfaction.

- Benchmarks: Many industries aim for 70–75% FCR rates.

5. Conversion/Goal Completion Rate

- What it is: The percentage of calls leading to a successful outcome (payment, booking, survey, etc.).

- Why it matters: The clearest business-performance indicator of your AI voice agent.

- Typical ranges: Outbound conversion rates vary but often land around 2–20%, depending on lead type, industry, and campaign specifics. For example, sales-driven operations have seen results in this range.

6. Opt-Out Rate

- What it is: The percentage of users who request to be removed from further outreach.

- Why it matters: High opt-out rates signal poor experience, over-targeting, or tone issues, and can impact compliance (TCPA, GDPR, etc.).
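
Metrics 3 through 6 can all be derived from the same per-call records. The Python sketch below shows one possible shape; the `CallRecord` fields are hypothetical, and it assumes the list contains only connected calls, so every rate uses connected calls as its denominator.

```python
from dataclasses import dataclass


@dataclass
class CallRecord:
    # All field names are hypothetical; map them to your own call logs.
    handle_seconds: float       # talk time plus wrap-up
    resolved_first_call: bool   # objective met with no follow-up needed
    goal_completed: bool        # payment, booking, survey, etc.
    opted_out: bool             # customer asked to stop further outreach


def summarize(calls):
    """Return AHT in minutes plus FCR, conversion, and opt-out percentages."""
    n = len(calls)
    if n == 0:
        raise ValueError("no calls to summarize")

    def pct(count):
        return 100.0 * count / n

    return {
        "aht_minutes": sum(c.handle_seconds for c in calls) / n / 60.0,
        "fcr_pct": pct(sum(c.resolved_first_call for c in calls)),
        "conversion_pct": pct(sum(c.goal_completed for c in calls)),
        "opt_out_pct": pct(sum(c.opted_out for c in calls)),
    }


# Made-up sample of four connected calls.
calls = [
    CallRecord(300, True, True, False),
    CallRecord(420, True, False, False),
    CallRecord(240, False, False, True),
    CallRecord(480, True, True, False),
]
summary = summarize(calls)
print(summary)
```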

7. Contact Data Quality & List Decay

- What it is: Measures like list penetration rate, wrong-number ratio, and right-party contact rate.

- Why it matters: The integrity of your contact database directly affects outcomes.

- Benchmark insight: B2B contact data decays at about 30% annually, while B2C data degrades even faster, at 50–70% per year.
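
To get a feel for what annual decay means between list refreshes, the Python sketch below estimates how much of a list is still accurate after a given number of months. It assumes the annual rate compounds smoothly over time, which is a modeling assumption; the figures above are only stated per year.

```python
def fraction_still_valid(annual_decay, months):
    """Share of records still accurate after `months`.

    Assumes the annual decay rate compounds smoothly over time
    (a simplifying assumption, not a measured curve).
    """
    if not 0.0 <= annual_decay < 1.0:
        raise ValueError("annual_decay must be in [0, 1)")
    return (1.0 - annual_decay) ** (months / 12.0)


# 30% annual decay (B2B) and 50% annual decay (low end of the B2C range).
b2b_after_6_months = fraction_still_valid(0.30, 6)
b2c_after_6_months = fraction_still_valid(0.50, 6)
print(f"B2B: {b2b_after_6_months:.0%} valid, B2C: {b2c_after_6_months:.0%} valid")
```

Under these assumptions, roughly one in six B2B records and close to one in three B2C records would already be stale after six months, which argues for refreshing lists more often than once a year.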

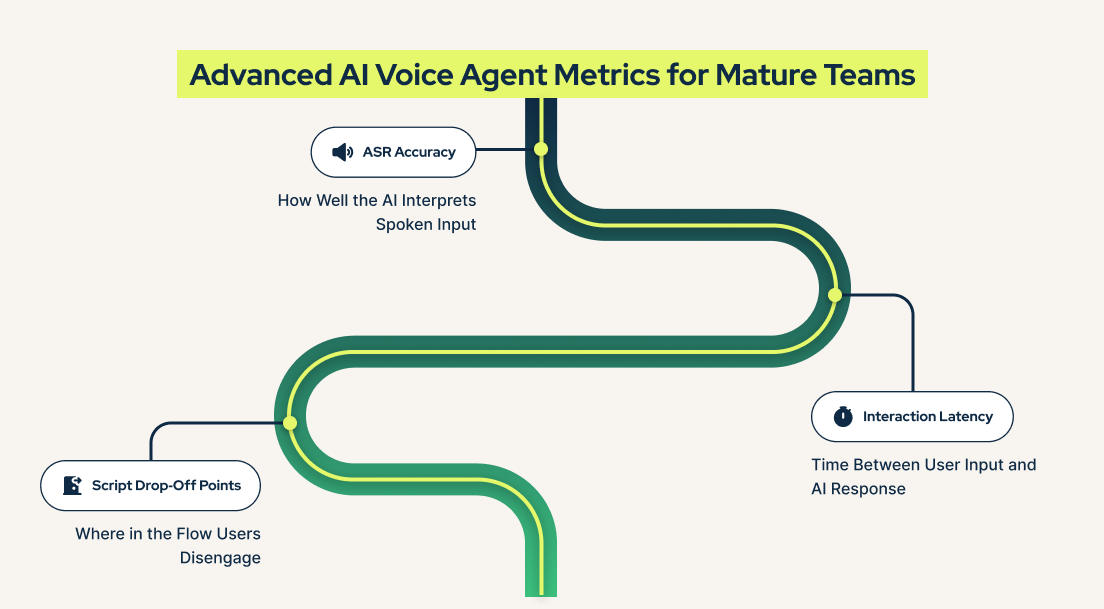

Bonus: Advanced Metrics for Mature Teams

Once your outbound AI voice operations are running smoothly on the basic KPIs, there’s a deeper layer of intelligence you can tap into. These advanced metrics get you closer to seamless, human-quality interactions and strategic optimization.

1. ASR Accuracy: How Well the AI Interprets Spoken Input

What it is: The precision of Automated Speech Recognition (ASR), typically measured as Word Error Rate (WER) or transcription accuracy.

Why it matters: If the AI mishears what’s said, everything downstream (intent detection, sentiment, goal completion) gets derailed.

- Benchmarks: Leading ASR systems achieve around 80–90% average accuracy under ideal audio conditions; 10–20% WER is common in real-world use.

- Baseline goal: Strive for at least 80% accuracy, especially under noisy or conversational settings unique to outbound calls. Poor transcription quality, below the industry baseline of ~72%, is a frequent source of frustration.
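
WER is usually computed as the word-level edit distance between a human reference transcript and the ASR output, divided by the number of reference words. The Python sketch below is a minimal version with simple lowercase-and-split normalization, which is an assumption; production scoring typically normalizes punctuation and numbers as well.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / reference word count,
    computed with word-level Levenshtein distance."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # prev[j] = edit distance between the ref words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


# Made-up example: one dropped word and one misheard word out of seven.
wer = word_error_rate(
    "please confirm my appointment for next tuesday",
    "please confirm appointment for next thursday",
)
print(f"WER: {wer:.1%}, word accuracy: {1 - wer:.1%}")
```

Note that the misheard word here is the one that matters most for the booking, so a WER that looks acceptable on average can still derail goal completion.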

2. Interaction Latency: Time Between User Input and AI Response

What it is: The delay from when a user stops speaking to when they hear the AI’s reply. Also known as “voice-to-voice latency.”

Why it matters: Human dialogue relies on milliseconds of rhythm. Even modest pauses can feel unnatural and hurt engagement. Users notice latency beyond 200 ms, and performance dips sharply after 500 ms, where conversations start to feel robotic.
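
A simple way to monitor this is to log the voice-to-voice delay for every turn and report the median, a high percentile, and the share of turns past the 500 ms mark. The Python sketch below uses the two thresholds from the paragraph above; the bucket names and sample values are assumptions.

```python
import statistics

NOTICEABLE_MS = 200  # from the text: users start to notice beyond this
ROBOTIC_MS = 500     # from the text: conversations start to feel robotic


def classify_latency(ms):
    """Bucket a single turn's latency; the labels are illustrative."""
    if ms <= NOTICEABLE_MS:
        return "natural"
    if ms <= ROBOTIC_MS:
        return "noticeable"
    return "robotic"


def latency_report(samples_ms):
    """Median, approximate 95th percentile, and share of turns over 500 ms."""
    # 19 cut points for n=20; the last one approximates the 95th percentile.
    cuts = statistics.quantiles(samples_ms, n=20, method="inclusive")
    over = sum(1 for ms in samples_ms if ms > ROBOTIC_MS)
    return {
        "p50_ms": statistics.median(samples_ms),
        "p95_ms": cuts[-1],
        "pct_over_500ms": 100.0 * over / len(samples_ms),
    }


# Made-up per-turn latencies in milliseconds.
samples = [180, 220, 150, 640, 310, 190, 205, 520, 175, 260]
report = latency_report(samples)
print(report)
```

Tracking the high percentile alongside the median matters because a few slow turns per call can make the whole conversation feel robotic even when the typical turn is fast.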

3. Script Drop‑Off Points: Where in the Flow Users Disengage

What it is: Identifying specific points in your call script or flow where the user stops engaging: hang-ups, silence, unexpected transitions.

Why it matters: These drop-off points highlight script friction, poor phrasing, or conversational mismatches that would be invisible without tracking.

How to measure: Monitor user behavior at each script node (e.g., “after intro,” “during info retrieval,” “post-prompt”) to isolate where and why people disengage.

Real-world example: Suppose an appointment reminder AI consistently sees a spike in hang-ups right after a phrase like “for security, please confirm your date of birth.” Tweaking the phrasing to something like “just to ensure I get you booked correctly...” could reduce drop-offs significantly.
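
Per-node tracking like this can be sketched in a few lines of Python. The example assumes a linear script and a log of the node where each call ended; the node names and counts are made up to mirror the date-of-birth scenario above.

```python
from collections import Counter


def drop_off_rates(script_nodes, last_node_per_call):
    """For each script node, the share of calls reaching it that ended there.

    Assumes a linear script: `script_nodes` is the ordered list of node
    names, and `last_node_per_call` holds the node where each call ended.
    Calls ending at the final node count as completions, not drop-offs.
    """
    ended_at = Counter(last_node_per_call)
    reached = len(last_node_per_call)
    rates = {}
    for node in script_nodes[:-1]:
        rates[node] = ended_at[node] / reached if reached else 0.0
        reached -= ended_at[node]
    return rates


# Hypothetical script and 100 made-up call outcomes.
nodes = ["intro", "identity_check", "offer", "confirmation"]
last_nodes = (["intro"] * 10 + ["identity_check"] * 36
              + ["offer"] * 9 + ["confirmation"] * 45)
rates = drop_off_rates(nodes, last_nodes)
worst_node = max(rates, key=rates.get)
print(rates, worst_node)
```

Dividing by the calls that actually reached each node, rather than by all calls, keeps late nodes from looking artificially healthy just because fewer people get that far.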

Why This Matters for Mature Teams

These advanced metrics are essential for optimizing beyond the basics. High ASR accuracy powers better understanding. Low latency maintains conversational rhythm and reduces abandonment and frustration. And tracking script drop-off points lets you refine scripts iteratively, improving engagement and success at each step.

Example Use Case: Appointment Renewal Campaign

Scenario

A large outpatient clinic used AI voice agents to manage routine appointment renewals such as physical check-ups, follow-up diagnostics, and recurring treatments (e.g., physiotherapy or allergy shots).

Initial Metrics

- Low goal completion: Many patients missed or ignored appointment renewal calls.

- High transfer rate: The AI often escalated calls to human staff for clarification or booking help, increasing admin load.

Actions Taken

To improve call outcomes and reduce operational strain, the team implemented several enhancements:

- Refined Call Script: Simplified medical language and personalized messaging based on past visit history (e.g., “Hi Sarah, this is a reminder to renew your annual wellness exam.”)

- Improved Intent Recognition: Upgraded the voice agent to better interpret varied patient responses like “Can I do it next week?” or “I don’t remember when it was.”

- Retry Logic: Introduced smart retries; calls were automatically rescheduled if the patient didn’t respond the first time (e.g., retry after 24 hours, then again at a different time of day).
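
The retry rule in the last item could be sketched as follows in Python. This is not the clinic's actual implementation: the 4-hour shift and the two-retry cap are assumptions chosen so the second retry lands at a different time of day.

```python
from datetime import datetime, timedelta


def retry_schedule(first_attempt, shift_hours=4, max_retries=2):
    """Return retry times for an unanswered call.

    The first retry is 24 hours after the initial attempt. Each later retry
    is a day after the previous one plus `shift_hours`, so it lands at a
    different time of day. Both defaults are illustrative assumptions.
    """
    retries = []
    next_time = first_attempt + timedelta(hours=24)
    for _ in range(max_retries):
        retries.append(next_time)
        next_time += timedelta(hours=24 + shift_hours)
    return retries


first_call = datetime(2025, 3, 3, 10, 0)
schedule = retry_schedule(first_call)
for when in schedule:
    print(when.strftime("%a %H:%M"))
```

A production scheduler would also need to respect permitted calling hours and skip anyone who has opted out.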

Results Achieved

- Increase in appointment renewal confirmations

- Decrease in escalations to human staff

- Average booking time reduced

Tips for Monitoring & Improving AI Voice Agent Metrics

To unlock the full value of your outbound AI voice operations, you need a strategy for tracking, analyzing, and optimizing performance beyond just measuring numbers.

1. Set Benchmarks Per Campaign

Every campaign (e.g. renewals, payment reminders, and surveys) has unique objectives and audiences. Establish benchmarks based on campaign goals and available data.

- Why it matters: Benchmarks help calibrate expectations and guide improvements. Compare your performance data to industry standards and set “achievable targets” that stretch your team strategically.

- Concrete application: If your Average Handle Time (AHT) is currently 15 minutes and the industry norm is 6 minutes, instead of aiming straight for the ideal, set a more realistic near-term goal like 10 minutes while monitoring key quality indicators such as FCR or CSAT.

2. A/B Test Your Scripts Regularly

Small tweaks in phrasing or structure can produce measurable changes in performance. A/B testing lets you validate what works and what doesn’t.

- Real-world impact: Though not specific to voice AI, A/B testing scripts, such as comparing different opening lines or message styles, can improve key KPIs like connection, goal completion, or CSAT.

- Quick example: Try an opener that says, "Hi [Name], this is [Clinic] confirming your appointment. Is this a good time?" versus "Hello [Name], this is [Clinic]. I wanted to check in on your appointment. Do you need to make any changes?" Track responses and optimize based on engagement or conversion impact.
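
Before declaring a winning opener, it helps to check that the difference is larger than random noise. One common approach, sketched below in Python, is a two-proportion z-test on goal completions; the counts are made up, and the test choice is an assumption rather than the only valid method.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for the difference in success rates
    between script A and script B, using a pooled standard error."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("success rates are all 0% or all 100%; test undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up results: opener A converts 120 of 1000 calls, opener B 156 of 1000.
z, p_value = two_proportion_z_test(120, 1000, 156, 1000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value (commonly below 0.05) suggests the gap between openers is unlikely to be chance alone. With only a few dozen calls per variant, even a large-looking gap often will not clear that bar.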

3. Integrate with CRM to Personalize Calls

A deep integration between your AI voice system and CRM can power personalization, leading to higher engagement and better outcomes.

- Why it matters: CRM integration enables agent (or AI) access to customer history, preferences, and context.

- Example: Begin calls with a line like, “Hi [Name], it’s [Your Service]. I see your subscription ends next week; should we set up your renewal now?” instead of a generic script that lacks depth.

4. Track Trends Over Time, Not Just Daily Snapshots

Metrics vary daily. Contextualizing performance over time helps identify patterns, reveal campaign impact, and guide strategic decisions.

- Why it matters: Day-to-day volatility can mislead. Spotting trends helps you detect shifts like engagement dips or drop-offs early.

- Example: If renewal completion rate spikes after a script change, keep the change in place longer, monitor retention, and adjust for long-term impact.
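
A trailing moving average is one simple way to smooth out daily volatility. The Python sketch below is a minimal version; the 7-day default window and the sample numbers are assumptions.

```python
def rolling_mean(values, window=7):
    """Trailing moving average over `window` days.

    Days without a full window behind them get None instead of a value.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    for i in range(len(values)):
        if i + 1 < window:
            smoothed.append(None)
        else:
            chunk = values[i + 1 - window : i + 1]
            smoothed.append(sum(chunk) / window)
    return smoothed


# Made-up daily renewal completion rates (percent).
daily_rates = [20, 26, 18, 24, 22, 30, 21, 27, 33, 25]
trend = rolling_mean(daily_rates, window=3)
print(trend)
```

Comparing the smoothed series before and after a script change gives a steadier read than comparing any two individual days.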

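Summary: Table of Best Practices

| Best practice | Why it matters | Example |
| --- | --- | --- |
| Set benchmarks per campaign | Calibrates expectations and guides improvements | Move AHT from 15 minutes toward an interim 10-minute target while monitoring FCR or CSAT |
| A/B test scripts regularly | Validates what works and what doesn’t | Compare two openers and track engagement or conversion |
| Integrate with CRM | Gives the AI access to customer history, preferences, and context | Open with the customer’s name and upcoming renewal date |
| Track trends over time | Day-to-day volatility can mislead | Monitor renewal completion over a longer period after a script change |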

Conclusion

AI voice agents are powerful, but only when optimized with intention. Metrics aren’t just numbers; they’re the tuning dials for better conversations, smoother operations, and stronger ROI.

In outbound customer service, success comes from ongoing, data-driven refinement. Improving ASR accuracy, lowering response latency, or fine-tuning scripts may seem like small adjustments, but together they compound into meaningful results.

The best teams treat metric tracking and iterative improvement as a continuous practice, not a one-time setup. Track, test, and refine consistently, and your outbound calls will feel more timely, more relevant, and more effective for the customers on the other end.

Ukti AI is built for high-volume outbound voice campaigns that sound natural and deliver measurable results. We help you track, test, and refine every conversation so performance keeps climbing. Book a demo to see how we can make your voice strategy smarter, faster, and more effective.
